You landed a big win for a client six months ago. A thoroughly researched blog post shot to the top of page one, driving a steady stream of traffic and leads. You celebrated, reported the good news, and moved on to the next fire.

But now, traffic from that star post is trickling away. It’s dropped a few positions, and the click-through rate is down. No major algorithm update hit, and no backlinks were lost. So, what happened?

That post is suffering from content decay: the slow, almost invisible erosion of relevance that turns high-performing assets into digital ghosts. For agencies managing dozens of clients, this isn’t a minor issue; it’s a silent, portfolio-wide threat to ROI. While you’re focused on creating new content, your past successes are quietly bleeding value.

The good news? The same technology driving the future of search can also protect your clients’ most valuable assets. Let’s explore how.

What Is Content Decay (And Why Is It Accelerating)?

Content decay is the natural process of online content losing its effectiveness over time. Think of it less like a sudden crash and more like a slow leak. A study by Ahrefs found that only a tiny fraction of pages will rank in the top 10 for a high-volume keyword for more than a year.

This decay isn’t just a matter of age; it’s driven by several factors:

- SERP Volatility: New competitors are constantly entering the ring with fresher, more comprehensive content, pushing your article down.

- Search Intent Drift: What a searcher wants from a query can change. A keyword that once sought a simple definition might now demand a step-by-step guide with video.

- Outdated Information: The stats, examples, and recommendations in your post become obsolete, reducing user trust and search engine credibility.

- Link Rot: External links in your article break, or the pages you linked to have changed, creating a poor user experience.

The challenge is that this decay happens silently and simultaneously across all your clients’ websites. The longer you wait to address it, the harder it is to regain lost ground.

The Agency Dilemma: Drowning in Spreadsheets and Guesswork

Most agencies know they should be refreshing content. The problem is the sheer, unscalable reality of doing it manually.

The typical process looks something like this:

- Export data from Google Search Console and Analytics for one client.

- Spend hours in a spreadsheet trying to spot downward trends.

- Manually Google the target keywords to see what the new top-ranking pages look like.

- Try to guess what needs adding, removing, or updating.

- Write a brief, assign the update, and wait for it to be published.

- Repeat for every other important page… for just that one client.

Now, multiply that by 10, 20, or 50 clients. It’s an operational nightmare. You simply don’t have the manpower to proactively monitor hundreds or thousands of blog posts. As a result, agencies are forced into a reactive mode, noticing a problem only when a client points out a major traffic drop.

Industry data suggests that a single piece of high-quality blog content can take four to eight hours to create, so letting that investment decay is like setting your marketing budget on fire. There has to be a better way.

A Smarter Path: Using AI to Automate the Content Audit

Instead of manually hunting for decay, you can deploy AI to act as a 24/7 watchdog over your entire client portfolio. This approach shifts your strategy from reactive fixes to proactive optimization, using technology to pinpoint the highest-impact opportunities with minimal effort.

Here’s how an automated workflow transforms the process:

- Decay Detection at Scale: AI-powered tools monitor key metrics like ranking drops, CTR decline, and impression loss across every tracked keyword for every client. When a page crosses a predefined “decay threshold” (e.g., loses 20% of its traffic in 60 days), it’s automatically flagged for review.

- Automated SERP Analysis: Once a page is flagged, the system automatically analyzes the current top 10 results for its primary keyword. It identifies what has changed: Are competitors using more images? Is there a new “People Also Ask” section to address? Has the dominant format shifted from a “what is” article to a “how-to” guide?

- Prioritization and Opportunity Scoring: Since not all refreshes deliver equal value, an AI system can score flagged pages based on factors like search volume, current position, and the potential for a quick win. This allows your team to focus on the 20% of updates that will drive 80% of the results, making AI-powered SEO automation a core part of your growth engine.
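To make the scoring idea concrete, here is a minimal sketch in Python. The `FlaggedPage` fields and the weighting formula are illustrative assumptions, not a standard formula or any particular tool's method: the score simply rewards high search volume, a meaningful slip in rankings, and a current position that is still close enough to the top to recover quickly.

```python
from dataclasses import dataclass


@dataclass
class FlaggedPage:
    url: str
    monthly_search_volume: int  # searches per month for the primary keyword
    current_position: float     # average ranking position today
    previous_position: float    # average position before the decline


def opportunity_score(page: FlaggedPage) -> float:
    """Hypothetical heuristic: favor high-volume keywords that slipped
    but still rank close to the top (a likely quick win)."""
    positions_lost = max(page.current_position - page.previous_position, 0.0)
    # Weight falls off linearly as the page sinks and reaches 0 at
    # position 21 and beyond (an assumed cutoff).
    proximity = max(0.0, (21.0 - page.current_position) / 20.0)
    return page.monthly_search_volume * proximity * positions_lost


def prioritize(pages: list[FlaggedPage]) -> list[FlaggedPage]:
    """Return flagged pages sorted from highest to lowest score."""
    return sorted(pages, key=opportunity_score, reverse=True)


# Made-up example data for three flagged pages.
pages = [
    FlaggedPage("https://example.com/b", 800, 4.0, 2.0),
    FlaggedPage("https://example.com/c", 12000, 25.0, 9.0),
    FlaggedPage("https://example.com/a", 5000, 6.0, 3.0),
]
for page in prioritize(pages):
    print(f"{page.url}: {opportunity_score(page):.1f}")
```

In this sketch, the high-volume page that has already fallen to position 25 scores zero, while the page that slipped from 3 to 6 goes to the top of the queue. The right weights will vary by client, so treat the formula as a starting point to tune.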

This isn’t about replacing strategists; it’s about empowering them. It frees your team from the drudgery of data-mining and lets them focus on the high-level strategy behind the updates.

Building Your Automated Content Refresh Workflow

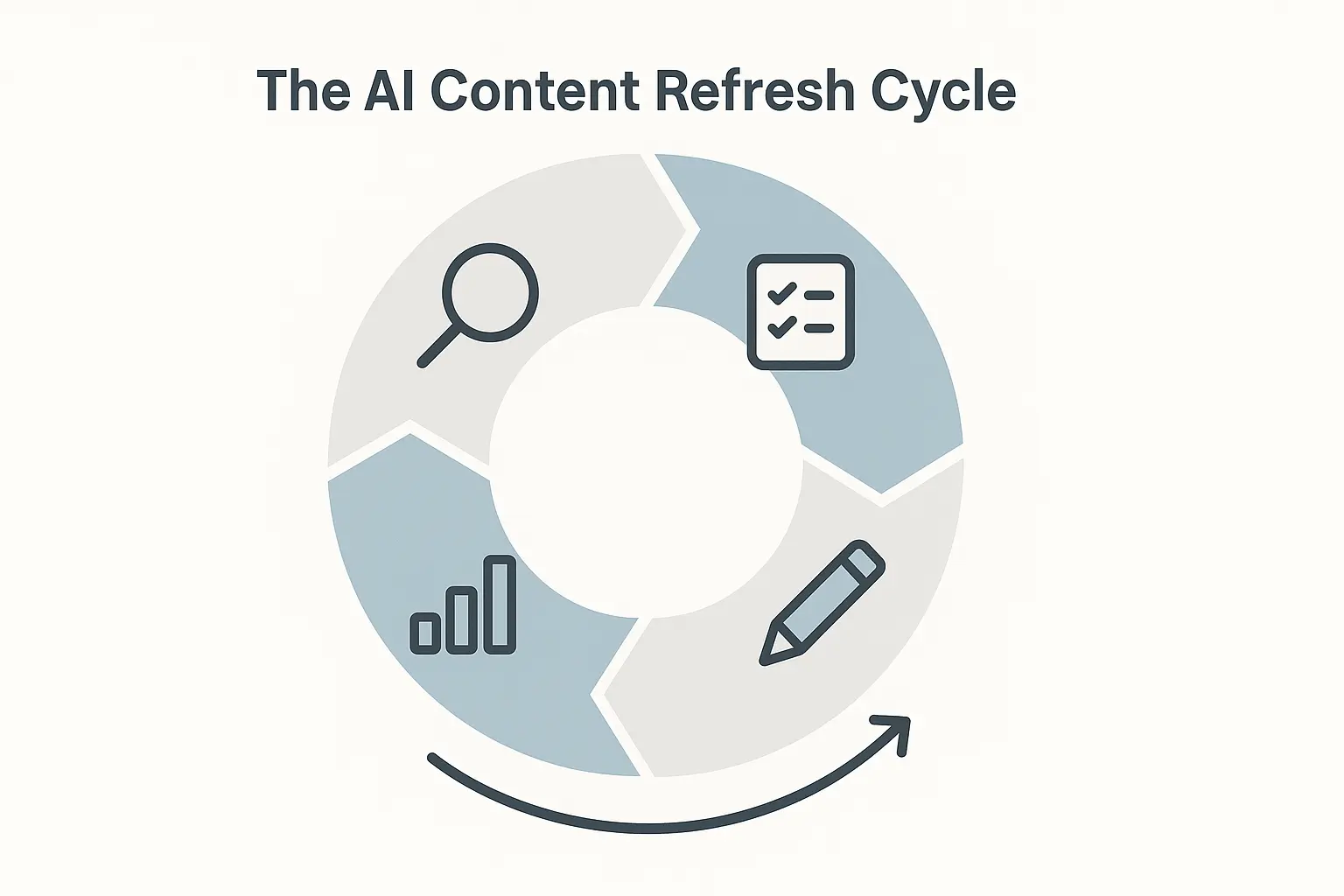

Implementing an automated system might sound complex, but it boils down to a logical four-step cycle, managed through a central platform or a combination of modern SEO tools.

Step 1: Centralize and Monitor

The foundation is a unified dashboard that ingests data from Google Search Console, Analytics, and rank trackers for all your clients. This gives the AI the raw data it needs to spot portfolio-wide trends.

Step 2: Define Decay Triggers

Set up automated rules. For instance, create an alert if any page that ranks in positions 1-10 experiences:

- A position drop of 3+ spots.

- A 15% decrease in clicks over 30 days.

- A significant drop in impressions.
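The rules above can be sketched as a small rule checker. This is an illustration under stated assumptions: the `PageSnapshot` structure is hypothetical, the page-one check is applied to the position at the start of the window, and the 25% impressions threshold is a placeholder, since "significant" has to be defined per client.

```python
from dataclasses import dataclass


@dataclass
class PageSnapshot:
    url: str
    position_before: float   # average position at the start of the window
    position_now: float      # average position at the end of the window
    clicks_before: int       # clicks in the previous 30-day window
    clicks_now: int          # clicks in the most recent 30-day window
    impressions_before: int
    impressions_now: int


MAX_POSITION_DROP = 3          # "a position drop of 3+ spots"
MAX_CLICK_DECLINE = 0.15       # "a 15% decrease in clicks over 30 days"
MAX_IMPRESSION_DECLINE = 0.25  # assumed value for "significant"


def relative_decline(before: int, now: int) -> float:
    """Fractional decline from `before` to `now`; 0.0 if there is no baseline."""
    if before <= 0:
        return 0.0
    return (before - now) / before


def decay_triggers(page: PageSnapshot) -> list[str]:
    """Return the names of every decay rule the page has tripped."""
    # Only pages that started the window on page one are monitored.
    if not 1 <= page.position_before <= 10:
        return []
    triggers = []
    if page.position_now - page.position_before >= MAX_POSITION_DROP:
        triggers.append("position_drop")
    if relative_decline(page.clicks_before, page.clicks_now) >= MAX_CLICK_DECLINE:
        triggers.append("click_decline")
    if (relative_decline(page.impressions_before, page.impressions_now)
            >= MAX_IMPRESSION_DECLINE):
        triggers.append("impression_decline")
    return triggers


# Made-up example: slipped from position 4 to 8, clicks down 18%,
# impressions down 10%.
page = PageSnapshot("https://example.com/guide", 4.0, 8.0, 1000, 820, 20000, 18000)
print(decay_triggers(page))
```

Returning the list of tripped rules, rather than a single yes/no flag, lets the alert say why a page was flagged, which is useful context for whoever reviews it.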

Step 3: Run Automated Content and SERP Audits

When an alert is triggered, the system automatically runs a content comparison. It scrapes the current top-ranking pages and compares their structure, keywords, and topics against your client’s decaying page to identify specific content gaps, outdated statistics, and missed subtopics.
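One simple way to approximate the comparison step is to look at headings. The sketch below assumes the pages have already been fetched and their headings extracted into plain lists (the fetching itself is out of scope here), and it treats a heading as a "subtopic". It relies on exact matching after normalization, so a real system would likely need fuzzier matching; the function names are hypothetical.

```python
from collections import Counter


def normalize(heading: str) -> str:
    """Lowercase and collapse whitespace so near-identical headings match."""
    return " ".join(heading.lower().split())


def find_content_gaps(
    client_headings: list[str],
    competitor_headings: list[list[str]],
    min_competitors: int = 2,
) -> list[tuple[str, int]]:
    """Return (subtopic, competitor_count) pairs that appear on at least
    `min_competitors` competitor pages but not on the client's page."""
    covered = {normalize(h) for h in client_headings}
    counts = Counter()
    for headings in competitor_headings:
        # A set ensures each subtopic is counted once per competitor page.
        counts.update({normalize(h) for h in headings})
    gaps = [
        (topic, n)
        for topic, n in counts.items()
        if n >= min_competitors and topic not in covered
    ]
    # Most widely covered gaps first; ties broken alphabetically.
    return sorted(gaps, key=lambda item: (-item[1], item[0]))


# Made-up headings for illustration.
client = ["What Is Content Decay?", "Why It Happens"]
competitors = [
    ["What is content decay?", "How to Fix Content Decay", "Tools"],
    ["How to fix content decay", "Content Decay vs. Content Pruning"],
    ["Tools", "How to Fix  Content Decay", "FAQ"],
]
print(find_content_gaps(client, competitors))
```

The `min_competitors` cutoff keeps one-off headings out of the results, so the writer only sees subtopics that several top-ranking pages agree on.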

Step 4: Generate Actionable Update Briefs

The final output isn’t a spreadsheet; it’s an intelligent brief that provides clear, data-backed instructions for a content writer, such as:

- “Add a 250-word section answering the question ‘How does X compare to Y?’”

- “Update the statistic from 2021 with the new 2024 data from [source].”

- “Embed a short explainer video, as 7 of the top 10 results now use video.”
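As a rough illustration of how audit findings could be turned into instructions like these, here is a minimal template-based sketch. The `AuditFindings` structure, its field names, and the video threshold are all assumptions made for the example; a production system would likely use richer inputs and wording.

```python
from dataclasses import dataclass, field


@dataclass
class AuditFindings:
    url: str
    missing_subtopics: list[str] = field(default_factory=list)
    # Each entry is (what the statistic describes, year it dates from).
    outdated_stats: list[tuple[str, int]] = field(default_factory=list)
    competitors_with_video: int = 0  # out of the top 10 results
    page_has_video: bool = False


def build_brief(findings: AuditFindings, video_threshold: int = 5) -> list[str]:
    """Turn structured findings into plain-language tasks for a writer."""
    tasks = []
    for topic in findings.missing_subtopics:
        tasks.append(f"Add a section covering: {topic}")
    for description, year in findings.outdated_stats:
        tasks.append(
            f"Replace the {year} statistic on {description} with current data"
        )
    if (not findings.page_has_video
            and findings.competitors_with_video >= video_threshold):
        tasks.append(
            f"Embed a short explainer video "
            f"({findings.competitors_with_video} of the top 10 results use video)"
        )
    return tasks


# Placeholder findings for illustration only.
findings = AuditFindings(
    url="https://example.com/guide",
    missing_subtopics=["How does X compare to Y?"],
    outdated_stats=[("market size", 2021)],
    competitors_with_video=7,
)
for task in build_brief(findings):
    print("-", task)
```

Keeping the findings structured and rendering the wording last means the same audit output can feed a writer's brief, a client report, or a ticket in a project management tool.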

From here, your agency can move straight into white-label SEO execution, rolling out these high-impact updates efficiently and demonstrating constant, proactive value to your clients.

The Impact: Compounding Gains and Proving Value

Shifting to an automated refresh cycle does more than prevent traffic loss; it creates a powerful engine for growth. Some published case studies report that refreshing and republishing old blog posts can more than double their organic traffic, although results vary by site and topic.

For your agency, the benefits are clear:

- Scalability: You can confidently manage the content health of a growing client portfolio without exponentially increasing your headcount.

- Proactivity: You move from defense to offense, spotting and fixing issues before your clients even know they exist. This builds immense trust and strengthens retention.

- New Revenue Streams: You can productize this service, offering a “Content Performance & Maintenance” retainer that provides tangible, ongoing value.

By integrating this process, you’re not just doing SEO; you’re managing a portfolio of digital assets. A refreshed, high-performing piece of content becomes a cornerstone for a larger Omnichannel Growth SEO strategy, providing fuel for social media campaigns, email newsletters, and sales enablement.

Frequently Asked Questions (FAQ)

What’s the difference between a content refresh and a full rewrite?

A refresh is a targeted update where you keep the original URL and the core of the article but update specific sections, add new information, and optimize for current search intent. A rewrite is a complete overhaul, often necessary when the original piece is fundamentally flawed or the topic has changed dramatically. The goal of automation is to catch content when it only needs a refresh, which is far more efficient.

How often should we audit content for decay?

With an automated system, the audit is continuous; the AI monitors performance 24/7. For manual checks, a quarterly review of your top 20% of pages (by traffic) is a reasonable starting point, but it will always miss opportunities a system can catch in real time.
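For the manual route, picking the pages to review can be as simple as the sketch below. It assumes you have exported traffic per URL into a dictionary; the function name and the sample numbers are made up for illustration.

```python
import math


def top_pages_by_traffic(traffic_by_url: dict[str, int], percent: int = 20) -> list[str]:
    """Return the top `percent` of URLs by traffic (always at least one)."""
    if not traffic_by_url:
        return []
    count = max(1, math.ceil(len(traffic_by_url) * percent / 100))
    ranked = sorted(traffic_by_url, key=traffic_by_url.get, reverse=True)
    return ranked[:count]


# Made-up monthly traffic numbers.
traffic = {"/a": 900, "/b": 4000, "/c": 150, "/d": 2200, "/e": 60}
print(top_pages_by_traffic(traffic))
```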

Can I just change the publication date to make it look “fresh”?

No. That’s a common tactic Google learned to ignore long ago. Freshness in SEO is about the substance of the content, not just the date stamp. You have to make meaningful changes to see a positive impact.

Is this strategy only for websites with large blogs?

Not at all. The strategy is about maximizing the value of every content asset. For a smaller site, each blog post is that much more critical. Keeping its top 5-10 articles performing at their peak is essential for growth.

Stop Plugging Leaks and Start Building Assets

Content decay is inevitable in the fast-moving world of SEO. Your agency’s response, however, is a choice. You can continue to reactively chase down traffic drops with manual, time-consuming audits, or you can build a scalable, automated system that turns a threat into an opportunity.

By leveraging AI to monitor, analyze, and prioritize content refreshes, you can protect your past wins and compound their value over time. This proactive approach doesn’t just deliver better results for your clients; it establishes your agency as a strategic partner equipped for the future of SEO.